Discover our latest news & updates

A technical deep-dive into LLM context management, the computational limits of context windows, and why every AI tool's 'context graph' solution might just be clever marketing around semantic search and RAG.

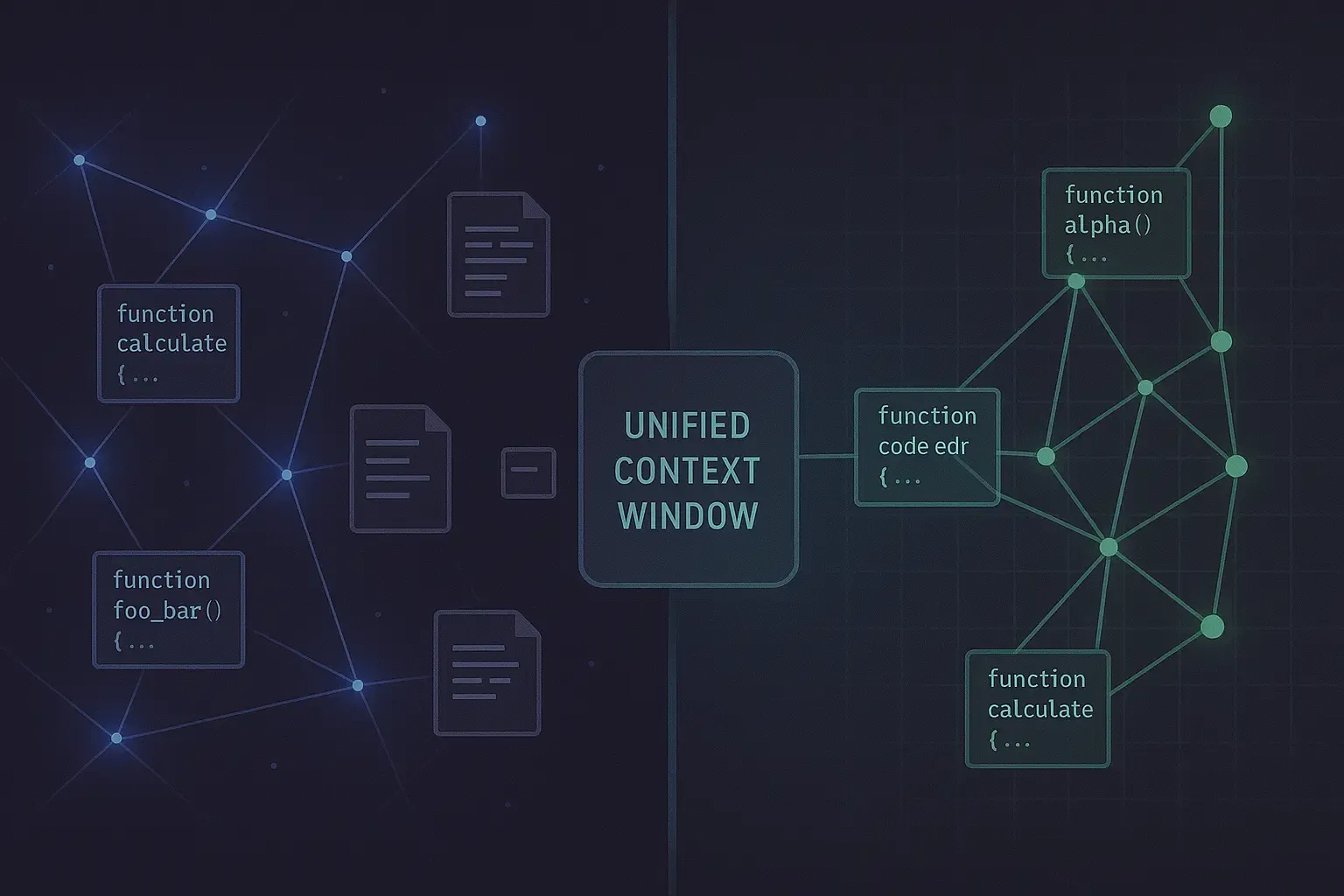

Individual layers may pass, but systems often fail at the seams. This post details how to conduct holistic 'System-in-the-Loop' tests, measuring how retrieval noise compounds into generation errors across 25+ repositories. We provide a blueprint for evaluating the full journey from a vague natural-language query to a multi-repo pull request.

Retrieving nodes is only half the battle; the LLM must synthesize code that adheres to cross-repo constraints. This post explores measuring faithfulness, checking execution-level correctness against internal SDKs, and using LLM-as-a-Judge to verify that generated code respects the security and type contracts of separate repositories.

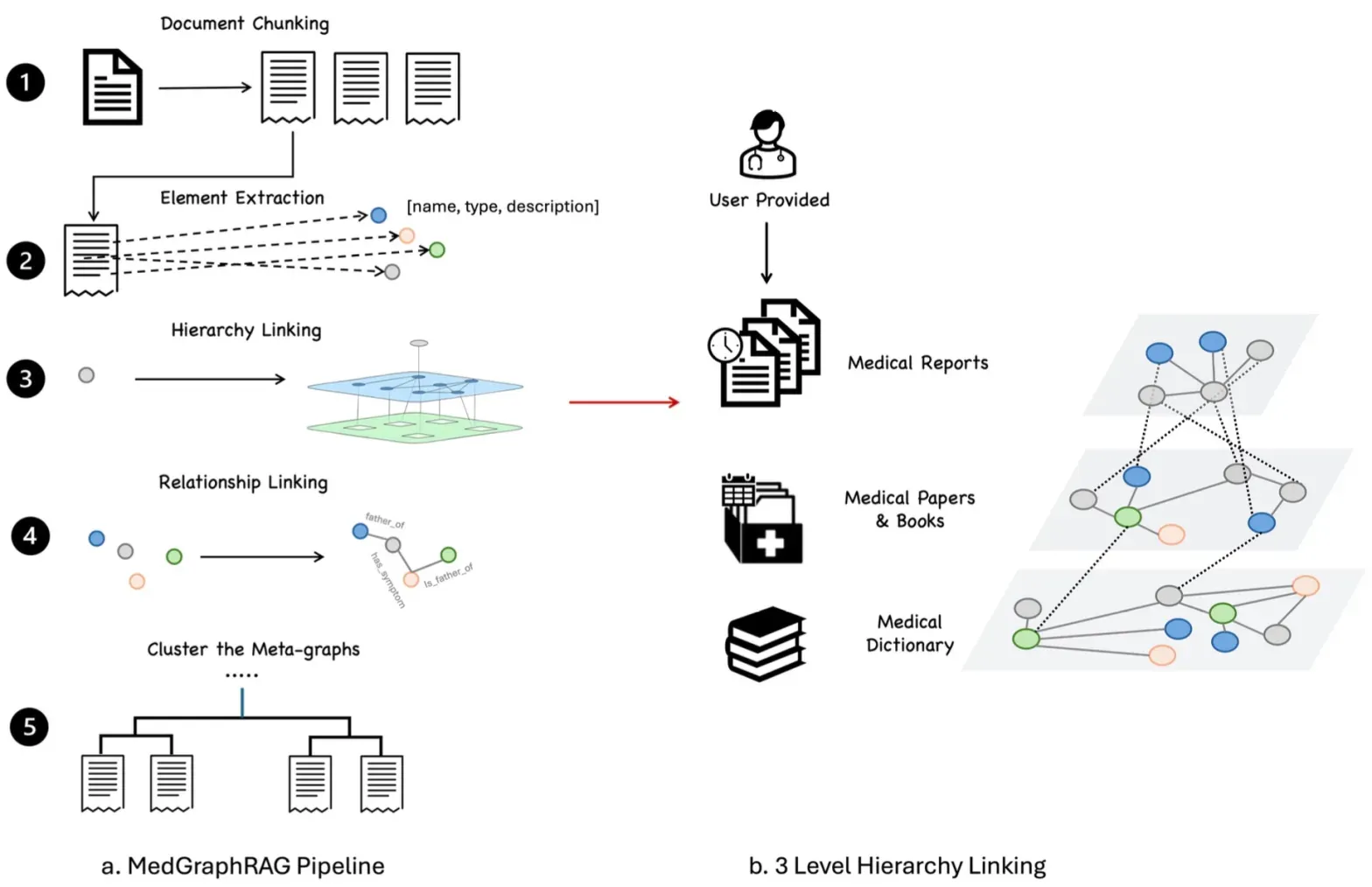

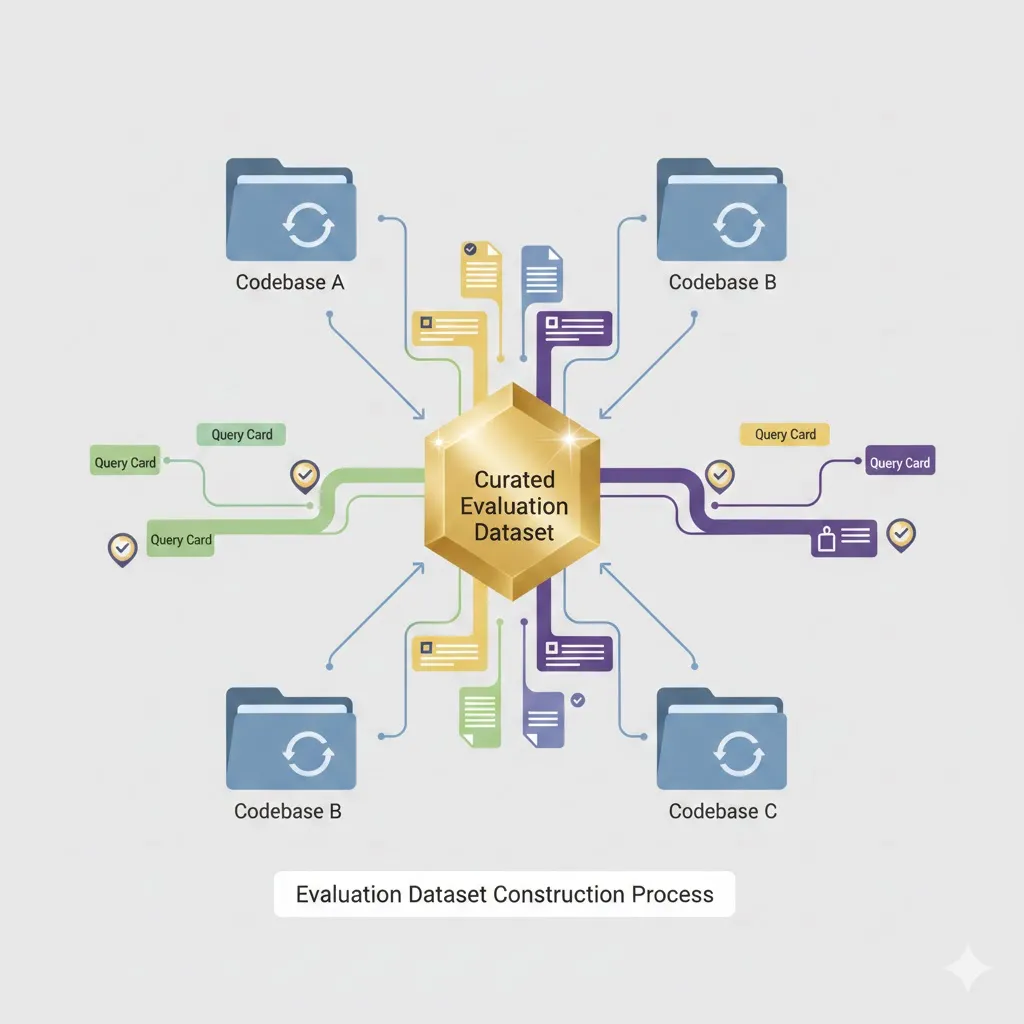

A practical guide to measuring retrieval quality in GraphRAG systems operating across multiple repositories. Covers gold-standard design, graded relevance metrics, cross-repository precision, graph traversal evaluation, and version coherence to ensure correct multi-repo retrieval.

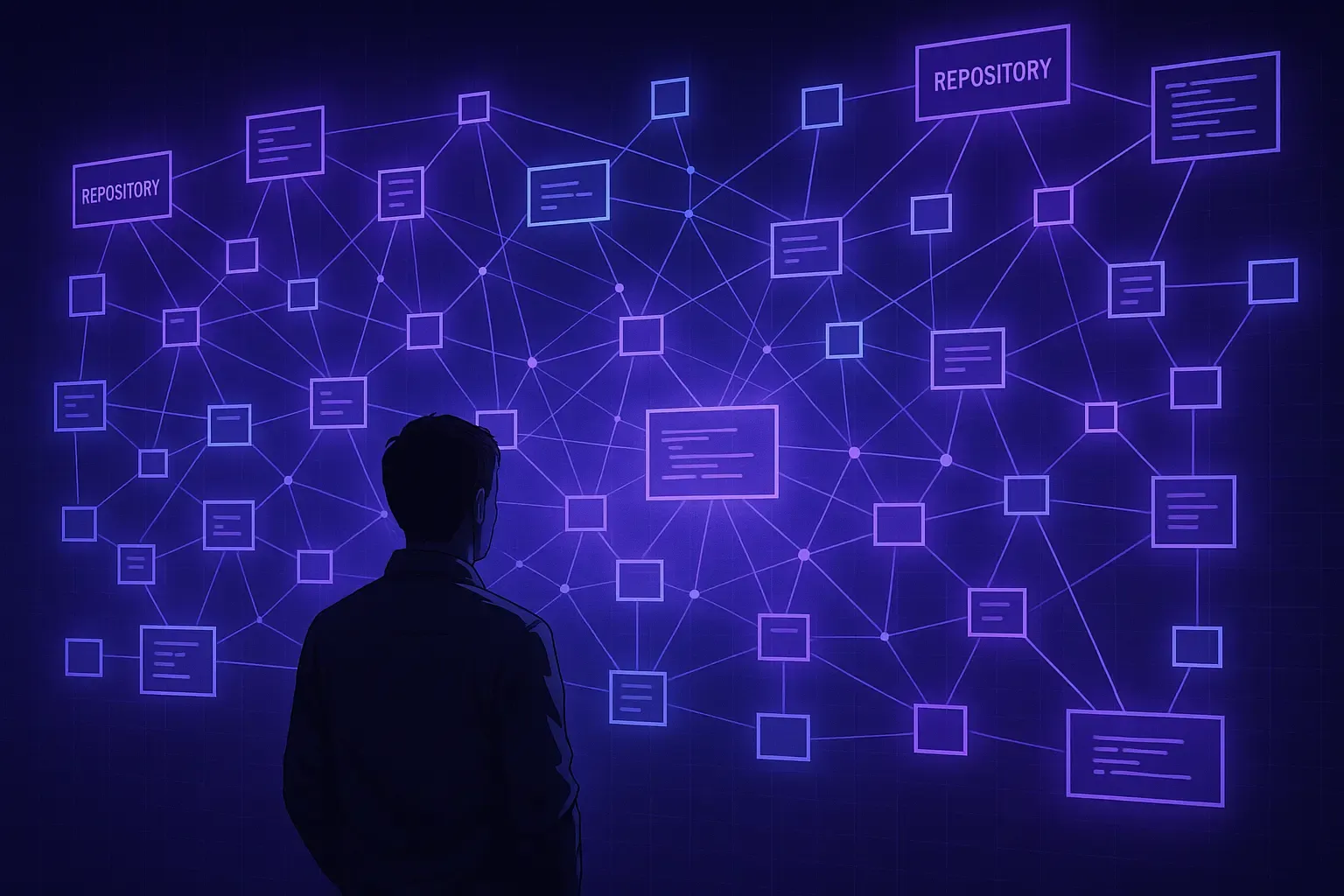

Before creating evaluation datasets for a GraphRAG system, you must understand your codebase topology. This post walks through building repository dependency graphs, classifying repos by role, mining real developer questions, and identifying high-priority code regions that stress cross-repository retrieval.

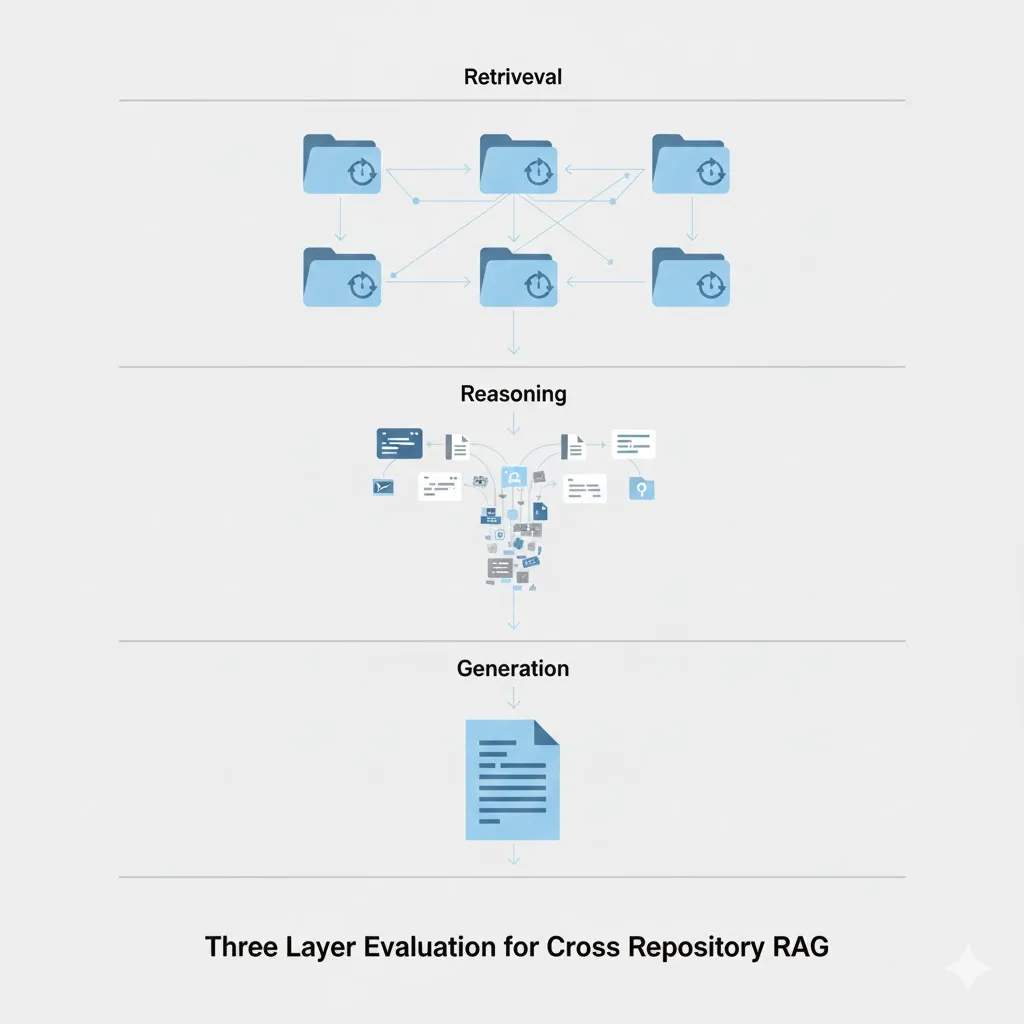

A comprehensive evaluation architecture for GraphRAG systems operating across multiple repositories. This post introduces the retrieval → reasoning → generation framework with specific metrics, target thresholds, and implementation code for each layer.

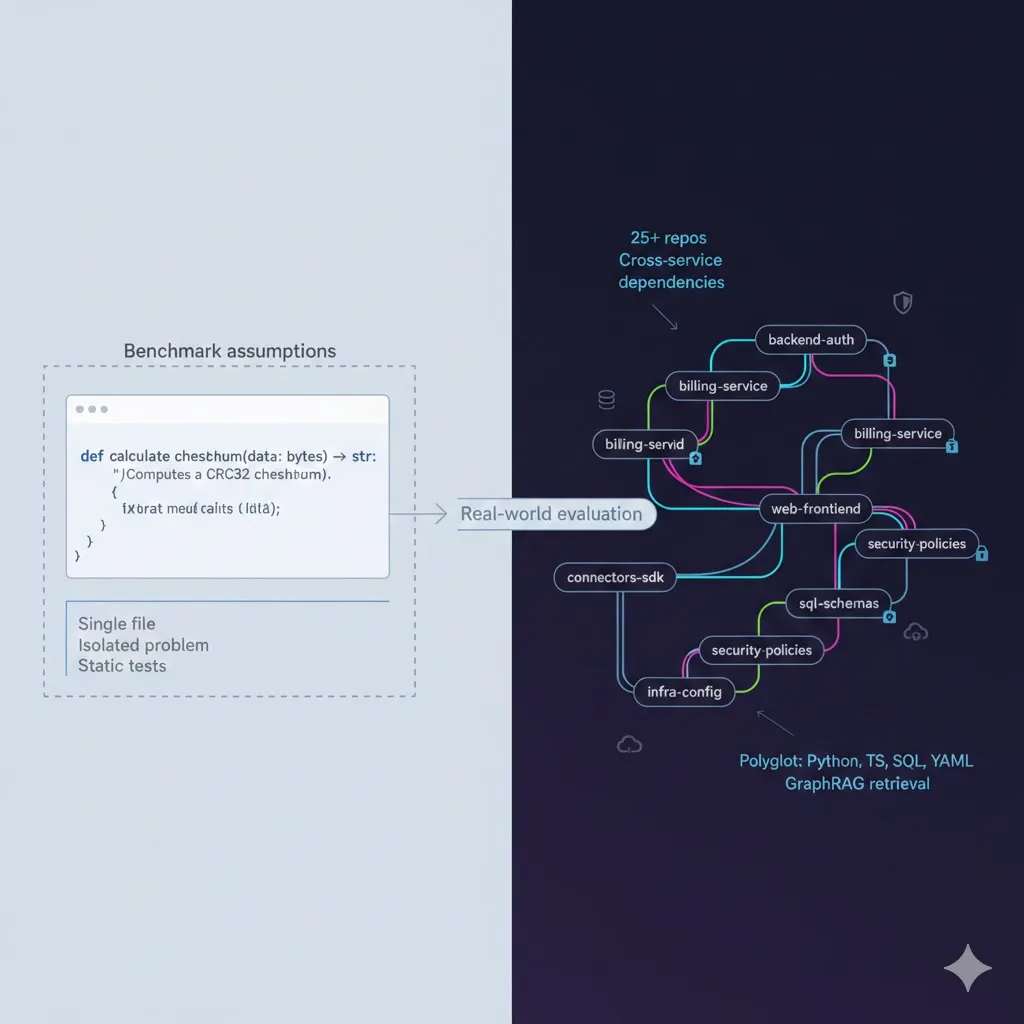

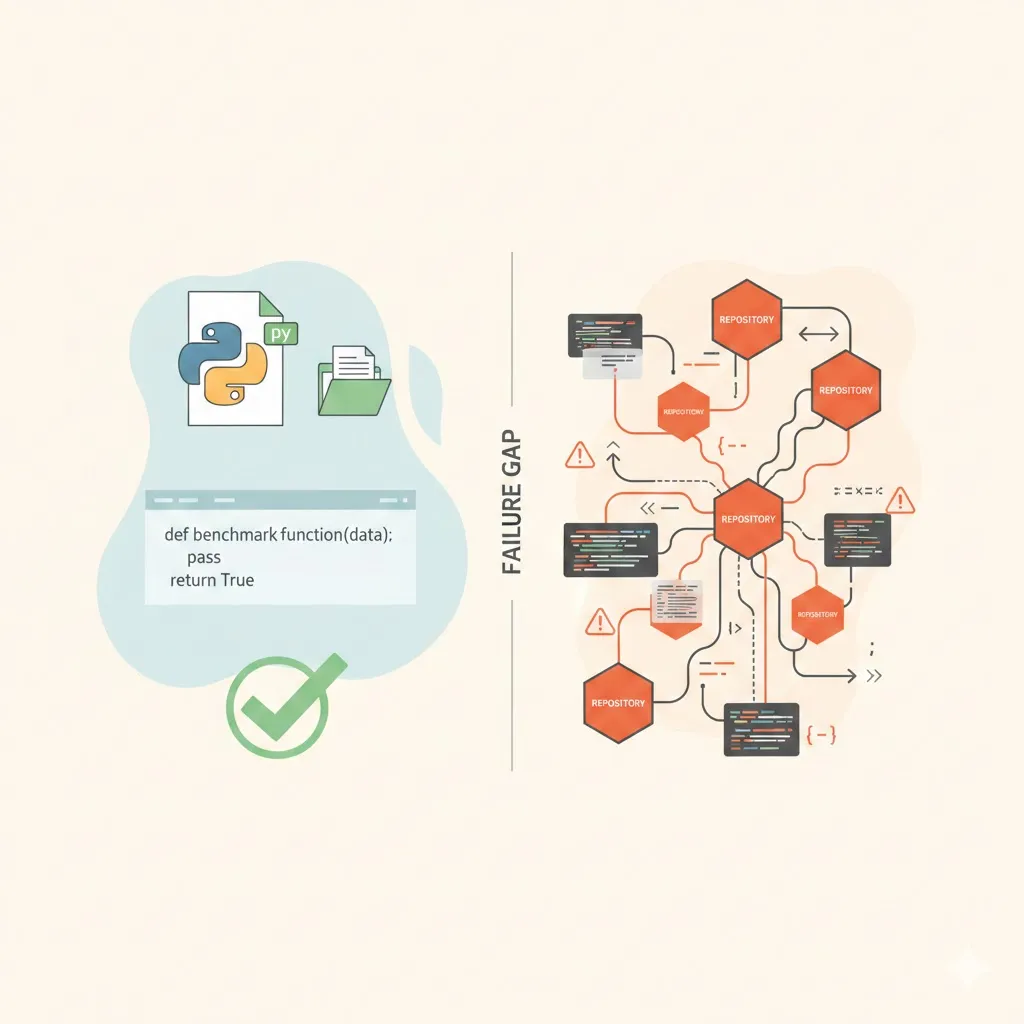

Existing benchmarks like HumanEval, MBPP, and SWE-Bench assume single-file, isolated context and cannot evaluate GraphRAG systems that reason across tens of thousands of files, multiple repositories, and evolving services. This post explains the unique failure modes in cross-repository retrieval and what metrics actually matter.

Vector search finds relevant code but misses the blast radius. Learn how combining lightweight code graphs with RAG creates cross-repository context that makes code changes across 50+ repositories predictable—without heavy graph infrastructure.

Move beyond keyword matching with semantic code search. Learn how embeddings, function-level understanding, and knowledge graphs transform code discovery—plus why citations matter for enterprise teams who can't afford hallucinated answers.

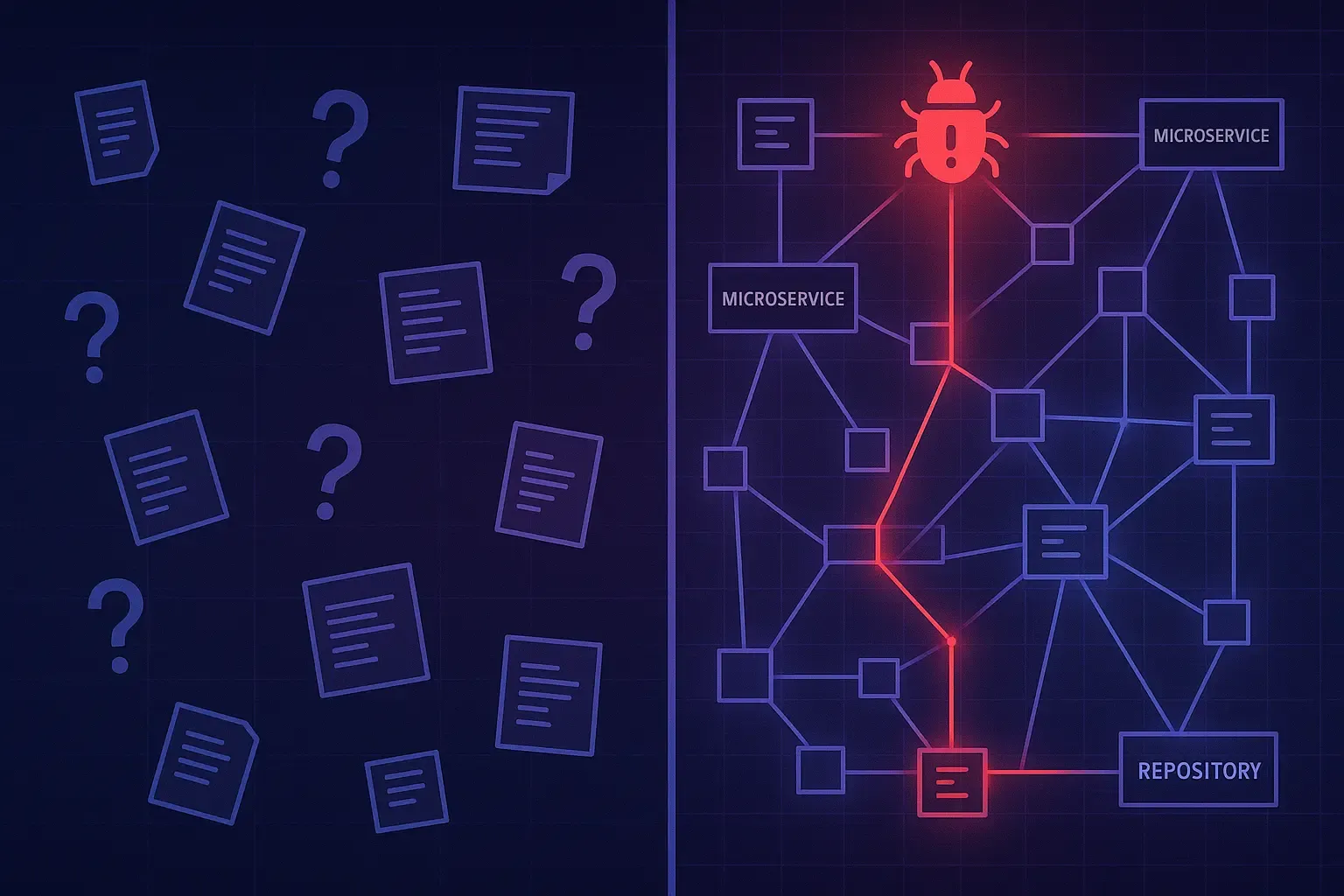

Tracing a production bug across microservices shouldn't take hours of repository hopping. See how enterprise teams use multi-repo code search to follow call paths, identify root causes, and debug cross-service failures in minutes instead of days.

Discover how to integrate the Model Context Protocol (MCP) into your Developer Copilot for real-time data fetch, secure action workflows, and seamless AI-driven developer automation.

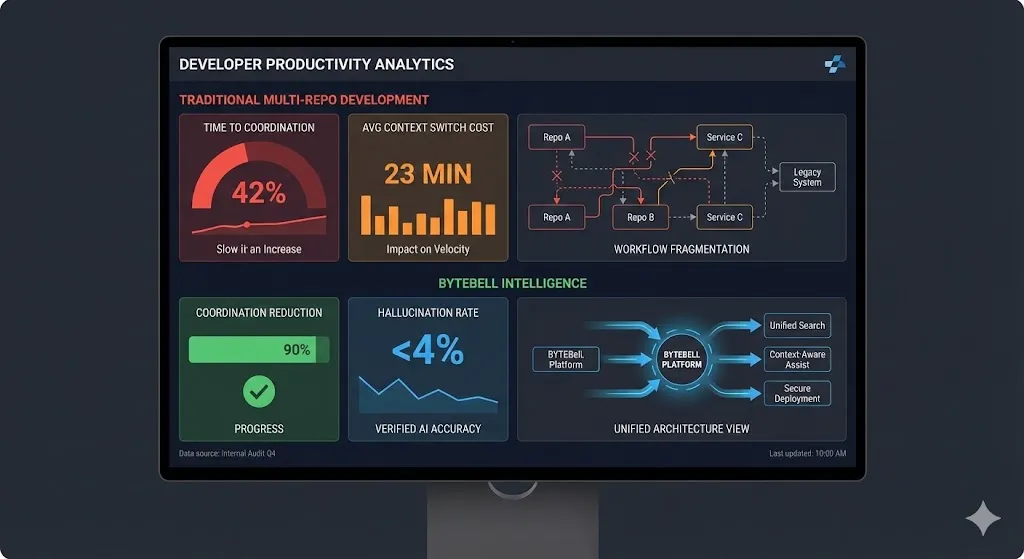

GitHub Copilot, Cursor, and Sourcegraph can't handle cross-repository dependencies. See why ByteBell's multi-repo intelligence solves what they can't.

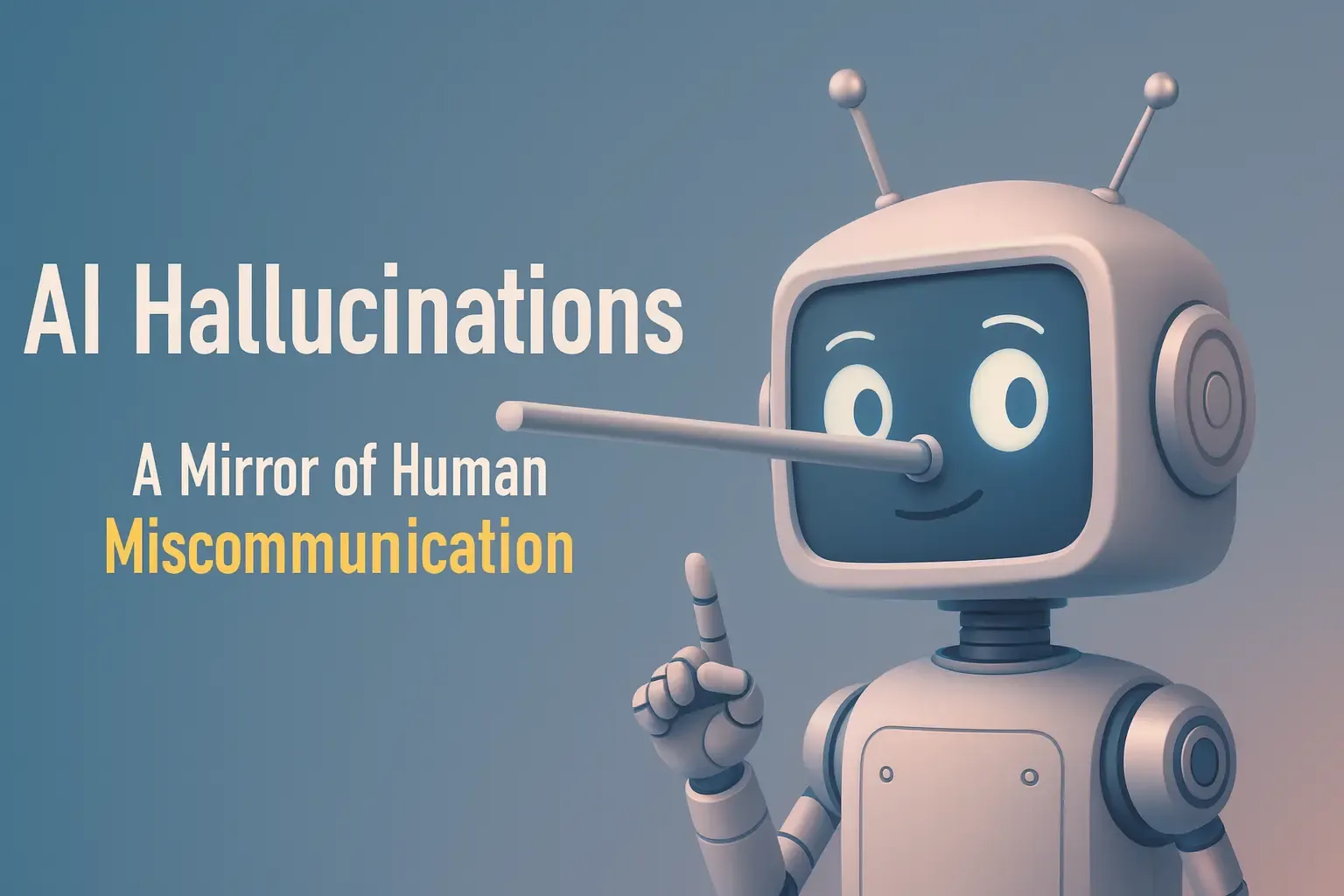

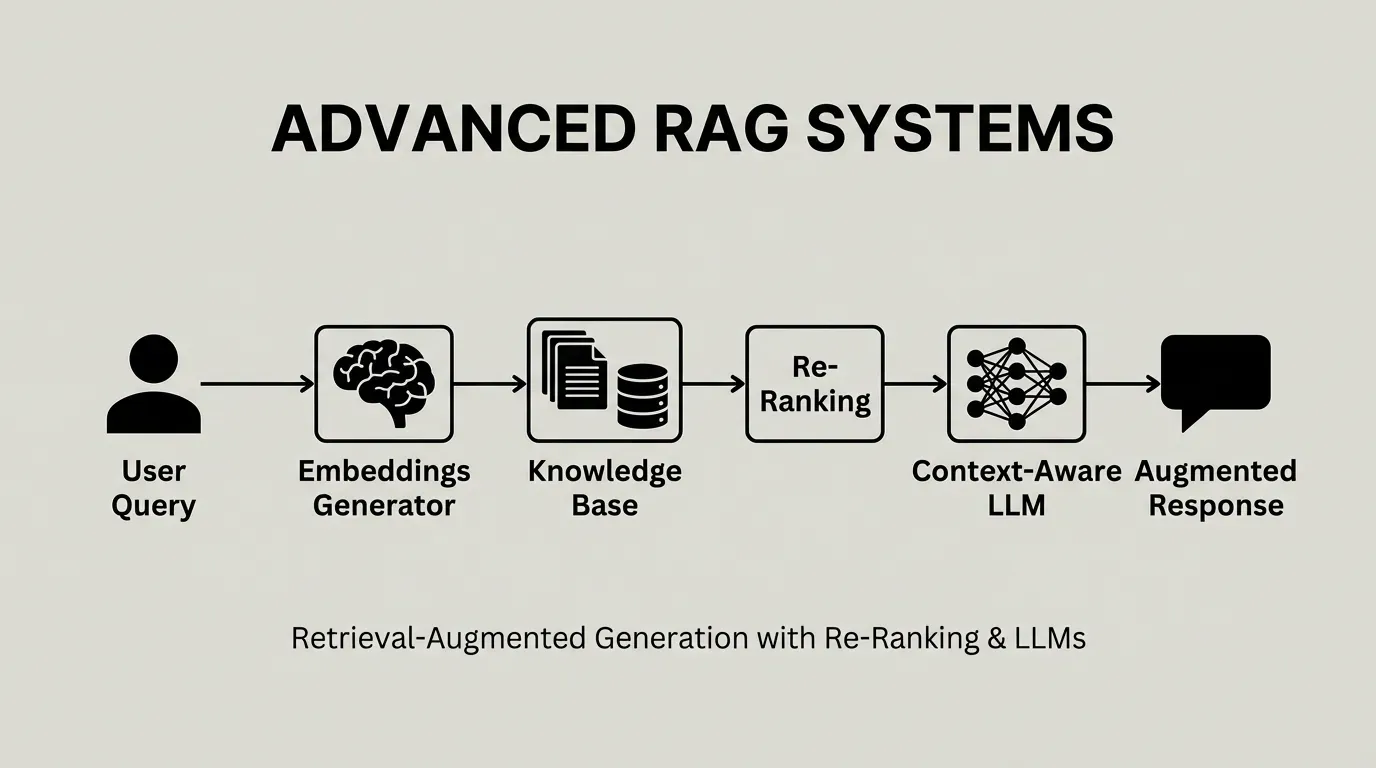

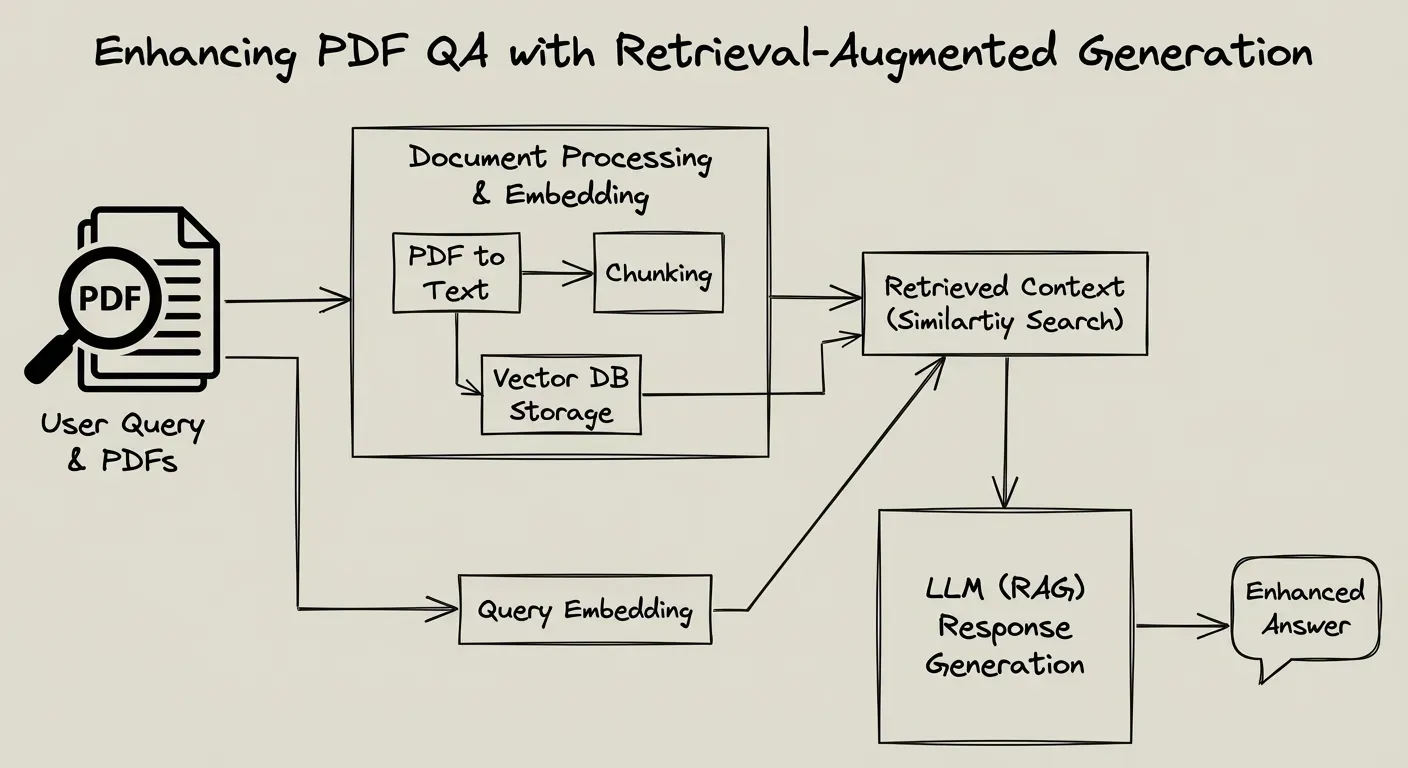

Build a developer copilot that answers with receipts and keeps its hallucination rate under 4% using retrieval-augmented generation, structure-aware chunking, version-aware graphs, and conservative confidence thresholds.

Modern AI models advertise million-token context windows like they're breakthrough features. But research shows performance collapses as context grows. Here's why curated context and precise retrieval beat raw token capacity—and how we've already solved it.

Zcash pioneered zk-SNARKs, and Bytebell now makes developing on Zcash faster by unifying every line of cryptographic, protocol, and documentation knowledge into a single searchable graph—helping privacy projects cut onboarding time and eliminate technical debt.

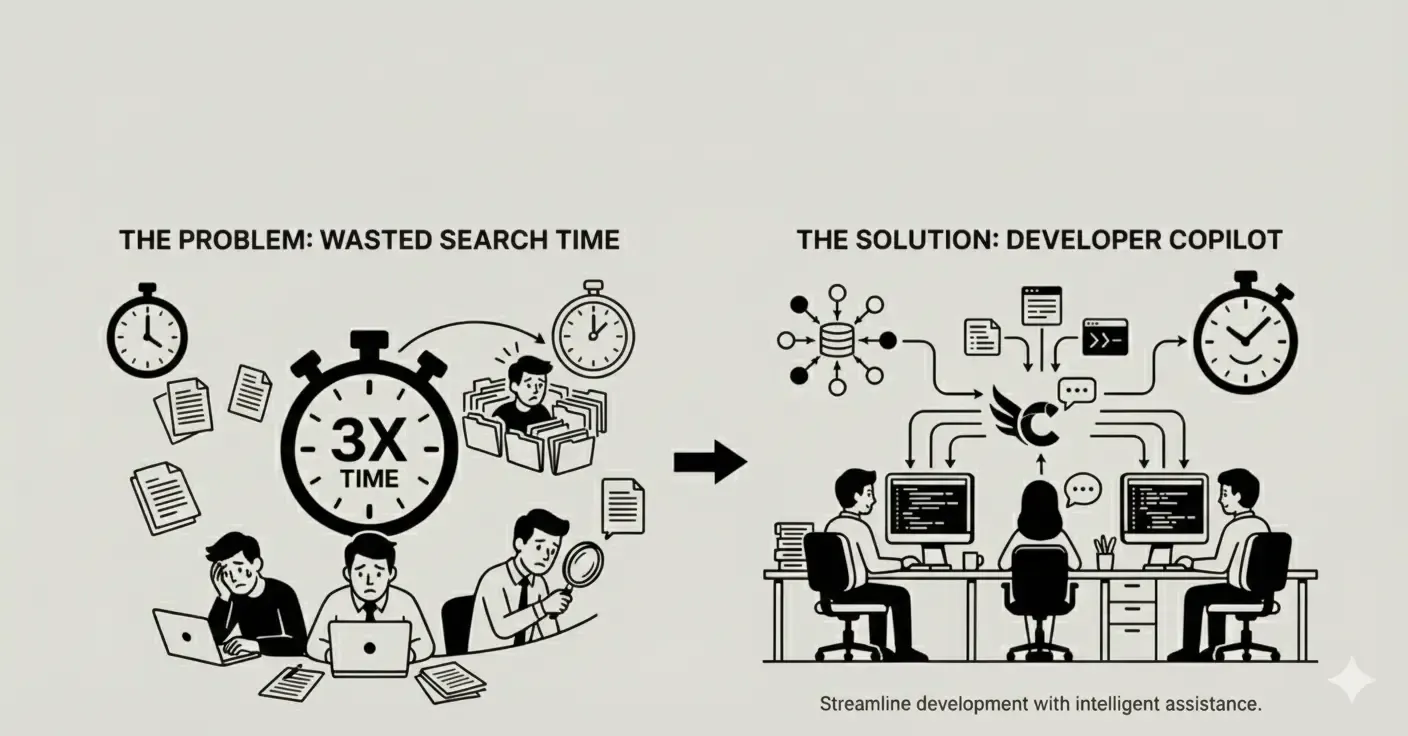

Knowledge workers waste 3X more time searching for answers than creating. Learn how context copilots eliminate fragmented knowledge, information decay, and trust deficits to help engineering teams work faster with source-backed answers.

Even with AGI, fragmented context and trust deficits will persist. Discover why source-bound answers, versioned memory, and knowledge infrastructure will be your competitive advantage in the next decade—and how to build it today.

Learn how to build a high-performance Developer Copilot using Retrieval-Augmented Generation (RAG), vector databases, semantic search, and best practices for developer documentation search.

Overcoming Multi-Hop Reasoning & Temporal Challenges with Hybrid Retrieval & Multimodal Integration (84% Accuracy Boost)

The universe's origins have fascinated humanity for centuries. From ancient myths to cutting-edge scientific theories, understanding how the universe came into existence has always been a central question for explorers and thinkers alike. This article dives into the Big Bang Theory, the most widely accepted explanation for the universe's birth.

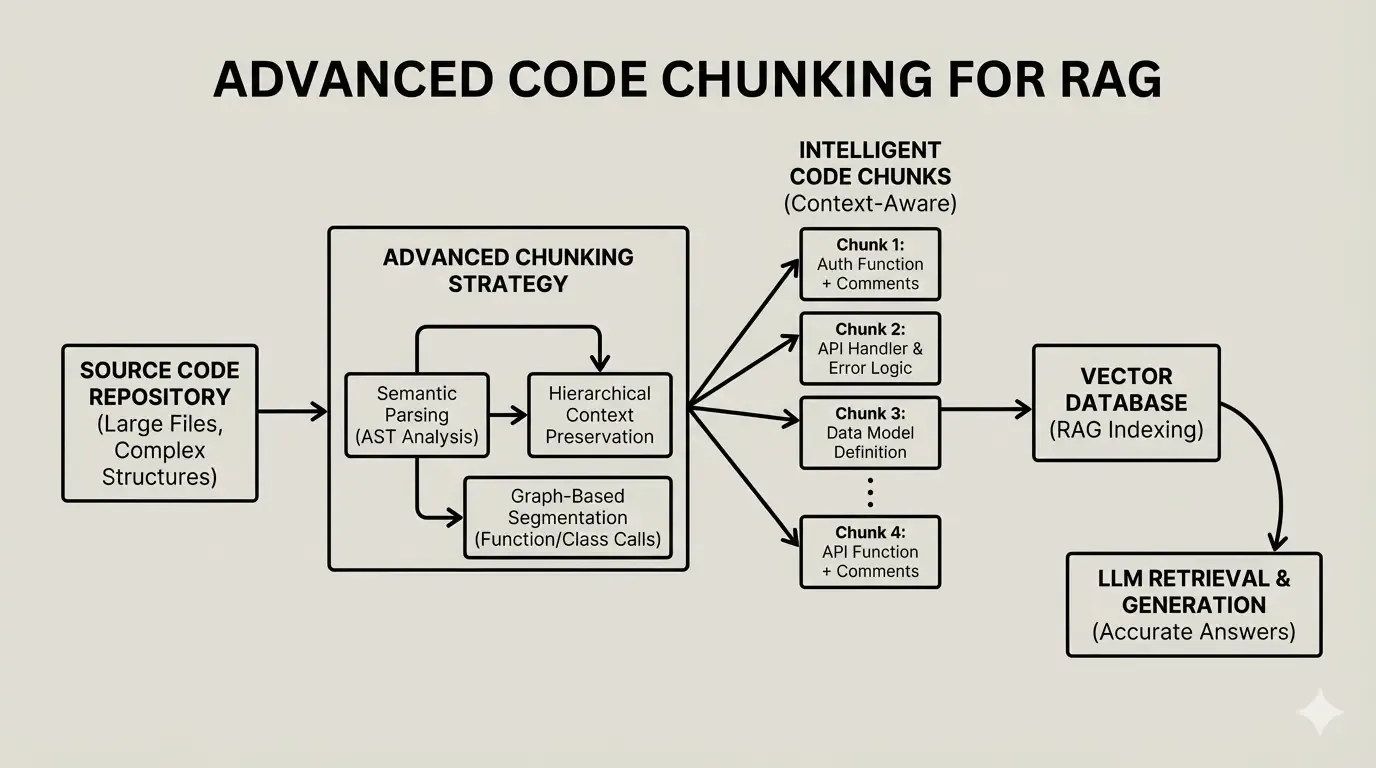

Recent research in code retrieval-augmented generation reveals transformative approaches that significantly outperform traditional Tree-sitter-based systems.

Technical developer documentation can prompt a wide range of user questions, from straightforward look-ups to complex analytical queries. Below, we categorize some of the most challenging question types and explain why they are difficult, with examples for each.