Posts from 2026

3 posts published

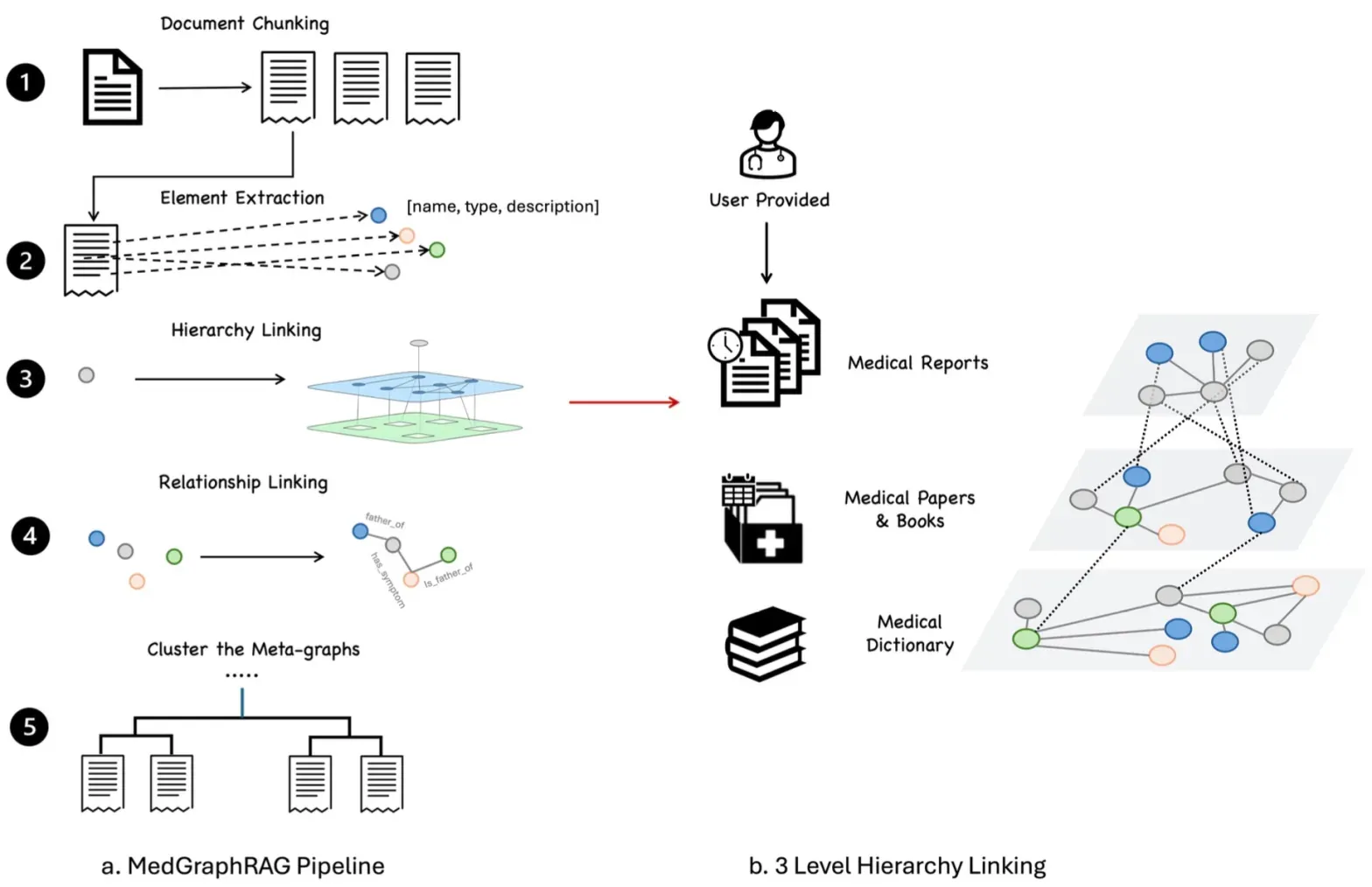

End-to-End System Evaluation: The Stress Test of GraphRAG

Individual layers may pass, but systems often fail at the seams. This post details how to conduct holistic 'System-in-the-Loop' tests that measure how retrieval noise compounds into generation errors across 25+ repositories. We provide a blueprint for evaluating the full journey from a vague natural language query to a multi-repo pull request.

Evaluating Generation and Grounding in Multi-Repo Systems

Retrieving nodes is only half the battle; the LLM must synthesize code that adheres to cross-repo constraints. This post explores measuring faithfulness, checking execution-level correctness against internal SDKs, and using LLM-as-a-Judge to verify that generated code respects the security and type contracts of separate repositories.

Why "Context Graph" Has Become the Most Misunderstood Term in AI Engineering

A technical deep dive into LLM context management, the computational limits of context windows, and why every AI tool's 'context graph' solution might just be clever marketing around semantic search and RAG.